Self-Driving Cars and the Future of Auto Accident Liability

Self-driving cars are no longer a dream of the future. Although still in its infancy, on-road vehicle automation technology grows by leaps and bounds each year, and governments and private companies agree that the eventual transition to cars without human drivers is all but inevitable.


That doesn’t mean the shift to driverless cars will be seamless. Automated vehicles from multiple companies have been involved in accidents, including a deadly Tesla crash in May. There are also numerous questions about privacy, regulation, insurance underwriting, and liability.

A future where software and hardware make more driving decisions could let people off the hook for crashes. But will automakers step up and pay for damages, or will they try to shift the blame to another party?

These are some of the issues ClassAction.com will be keeping a close eye on in the months and years to come as we aim to keep people in the know.

Have a question or complaint about a self-driving vehicle? Please let us know.

The Driverless Future is Now

President Obama recently wrote in a Pittsburgh Post-Gazette op-ed that “self-driving cars have gone from sci-fi fantasy to an emerging reality.”

That reality is seen in the efforts of automakers such as Ford, Volvo, and Tesla—as well as tech companies like Google, Apple, and Uber—to roll out fleets of self-driving cars as soon as 2021.

In the same editorial, Obama announced a White House conference on October 13 in the Steel City to discuss new technologies and innovations. His administration has published a 15-point safety checklist it hopes automakers and tech companies will adopt before self-driving cars hit the road.

The incoming administration could have different policy goals for self-driving cars, but politics aside, the rising tide of autonomous vehicle technology makes it an issue that regulators can’t escape.

A Rush to Market?

Google, the leader in self-driving technology, has been working on autonomous cars since 2009. The company’s car program has already put nearly sixty self-driving vehicles on roads in four states and logged two million miles. Apple is also rumored to be working on its own self-driving car, while Uber and Lyft have plans to introduce driverless taxis. Lyft co-founder and president John Zimmer boldly predicted, “By 2025, private car ownership will all but end in major U.S. cities.”

Ford and Volvo plan to mass-produce fully autonomous vehicles by 2021. Cars from Audi, BMW, Mercedes-Benz, Tesla, and other makers already feature sophisticated automation systems that can parallel park, follow a lead vehicle at a safe distance, and brake to avoid collisions, among other features.

ClassAction.com attorney Mike Morgan, however, cautions that, in their zeal to become self-driving car market leaders, companies may not be focusing enough on big-picture safety.

“The most dangerous part of self-driving cars is the rush to market,” said Morgan. “Everyone wants to be first to sell the most cars, but the truth is the technology they are using is going to lead to catastrophic results.”

Degrees of Automation

In terms of legal repercussions, the distinction between fully autonomous and semi-autonomous technology is a significant one.

In the former, an automated driving system performs all driving tasks under all conditions. Such vehicles—which are still years away—would not even have a steering wheel.

Semi-autonomous vehicles, on the other hand, require some level of driver engagement, depending on the system capabilities. Tesla Motors Inc.’s Autopilot is a semi-autonomous feature that can control the car in certain conditions. Similar systems are planned for 2017 General Motors and Volvo vehicles as luxury options.

The Society of Automotive Engineers (SAE) developed standards for driving automation levels, ranging from 0 (no automation) to 5 (full automation). Tesla’s Autopilot, blamed for a deadly crash earlier this year, is classified as Level 2.

Countdown to the Driverless Future

Experts disagree on when autonomous vehicles will become mainstream. The Insurance Institute for Highway Safety (IIHS) estimates that there will be 3.5 million self-driving vehicles by 2025, and 4.5 million by 2030, although it cautions that the vehicles will not be fully autonomous.

A majority of autonomous vehicle experts surveyed by IEEE, a technical professional organization, said they expect mass-produced cars to lack steering wheels and gas/brake pedals by 2035.

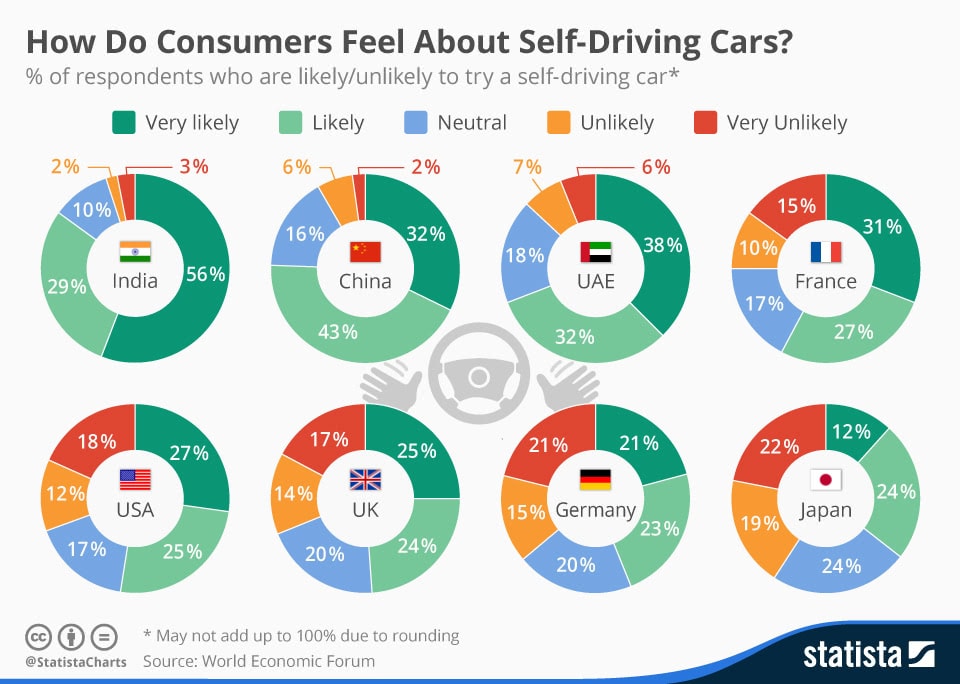

While a future of self-driving cars seems certain, there are roadblocks to their widespread adoption. The same IEEE survey that asked experts when autonomous cars might become widespread also asked about potential obstacles. Leading responses included legal liability, policymakers, and consumer acceptance.

Tesla Autopilot Mishap Could Spell Legal Trouble

A lawsuit over the deadly Tesla accident in May is a strong possibility. In that crash, the driver’s Autopilot system failed to recognize a tractor-trailer turning in front of his Model S, and the car smashed into it.

Tesla maintains, “Autopilot is an assist feature. You need to maintain control and responsibility of your vehicle.” But ClassAction.com attorney Andrew Felix counters, “Even the term ‘autopilot’ was used to coerce customers into a false sense of confidence and safety while this technology is still in its infantile stages.”

Tesla has not gone so far as to blame the driver for the deadly crash. Should Tesla do so in litigation, several legal arguments would be available to his family, but how those arguments would apply under the circumstances remains unknown.

“This is all new territory technologically and legally as well,” said Harvard Law School professor John C.P. Goldberg. “There are well established rules of law but how they apply to the scenario and technology will have to be seen.”

Volvo Promises to Assume Accident Liability. Will Others Follow Suit?

In the Tesla example, liability could come down to onboard vehicle log data. But semi-autonomous cars, which need some driver input, are very different from fully autonomous cars, which leave little or no responsibility to the driver.


Legal experts generally agree that carmakers will assume blame for crashes when a computerized driver completely replaces a human one. For its part, Volvo has promised to assume full accident liability whenever its cars are in autonomous mode.

John Villasenor, a professor at UCLA and author of the paper, “Products Liability and Driverless Cars,” is confident that minor, sensible tweaks to current laws—not broad new liability statutes—will ensure that manufacturers are held accountable. “Existing liability frameworks are well positioned to address the questions that will arise with autonomous cars,” he told IEEE Spectrum.

A Bigger Slice of a Smaller Pie

Although this framework might seem likely to bury carmakers in litigation, automated technology is expected to drastically reduce accidents, the vast majority of which are caused by human error. After all, automation has already made cars safer. Electronic stability control systems, for example, have saved thousands of lives.

“From the manufacturer’s perspective,” according to tech policy expert and USC professor Bryant Walker Smith, “what they may be looking at is a bigger slice of what we all hope will be a much smaller [liability] pie.”

But what if the driverless technology is forced to make a decision between, say, crashing into a barrier and killing the car’s only occupant, or running over a pedestrian to avoid a crash?

New Age, Age-Old Dilemma

This is the type of scenario explored by a game developed at MIT, a variation on the classic “trolley problem” thought experiment. The trolley problem poses the following moral quandary: a runaway trolley is heading toward five unsuspecting workers. Do you pull a lever, sending the trolley down a different track where there’s only one worker, or do you do nothing and let it kill five?

MIT’s “Moral Machine” game presents people with a self-driving car whose brakes have failed and asks them to choose: swerve or stay straight; hit law-abiding pedestrians or jaywalkers; hit a boy or an elderly man; and so on.

That an autonomous car algorithm might have to make such a life-and-death decision shows how technology’s intersection with humanity is never black and white, or certain.

Lawmakers Forced to Play Catch-Up

Technology moves faster than the law and ethics.

In the first decades of the 20th century, when the number of cars on roadways exploded, there were no traffic laws, traffic signs, lane lines, or licensing requirements. The speeding vehicles terrified horses and ran over thousands of unaccustomed pedestrians, leading the state of Georgia to classify automobiles as “ferocious animals.”

Slowly, though, as the automobile became a staple of American life, governments figured out sensible legal solutions to the hazards cars were creating. A similar trajectory seems likely in response to whatever unintended consequences self-driving cars bring.

With the speed of technological change these days, the time may not be far off when human-driven cars are as quaint a concept as horse-drawn carriages are today. Indeed, given the breakneck pace of innovation, the ink may not be dry on self-driving car legislation before lawmakers are grappling with flying cars.

Through all the changes, count on ClassAction.com to keep you up to speed.